2. Revisiting Foundations of Human Rights Law in a Digital Context

Outline of chapter

This chapter revisits the basics of international human rights law and introduces how human rights law functions in the digital environment and in relation to AI systems. More specifically, this chapter addresses:

- values and principles underlying human rights law and the sources of human rights law (sections 2.1, 2.2)

- how human rights law progressively develops, including in the digital sphere and in relation to AI systems (section 2.3)

- the requirements according to which restrictions to human rights are lawful under human rights law (section 2.4)

- how human rights may be protected in different ways under different treaties, instruments and regimes (section 2.5)

- what the personal and territorial scope of States’ obligations under human rights law is (section 2.6)

- what the human rights obligations of business actors are (section 2.7)

- whether the use of certain technologies and/or AI systems is incompatible with the exercise of human rights (section 2.8)

A basic understanding of human rights law is a prerequisite

- Please make sure to refresh your existing knowledge of human rights law by reading the relevant chapter of an international law textbook (for example, Klabbers, International Law, 2016, ch 6)

- Chapter on International Human Rights Law, in S González Hauck et al., Public International Law: A Multi-perspective Approach (Routledge 2024) 531-616

(2.1) Values and Principles of International Human Rights Law

(2.1.1) Ideals of human rights law: Universal Declaration of Human Rights

Certain aspirations and ideals of human rights law are embodied in the first international instrument on human rights, the Universal Declaration of Human Rights (UDHR). Although the UDHR is non-binding, it is a document of considerable political and normative significance.

Whereas recognition of the inherent dignity and of the equal and inalienable rights of all members of the human family is the foundation of freedom, justice and peace in the world (preamble, para 1)

All human beings are born free and equal in dignity and rights.

They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood (Article 1 UDHR)

(2.1.2) Universality and interdependence of human rights law

A cardinal tenet of human rights law is that all human rights are universal, indivisible, interdependent and interrelated. All human rights are of equal value (there is no hierarchy among them) and the effective exercise of one right depends on the exercise of others. According to the 1993 Vienna Declaration and Programme of Action:

5. All human rights are universal, indivisible and interdependent and interrelated. The international community must treat human rights globally in a fair and equal manner, on the same footing, and with the same emphasis. While the significance of national and regional particularities and various historical, cultural and religious backgrounds must be borne in mind, it is the duty of States, regardless of their political, economic and cultural systems, to promote and protect all human rights and fundamental freedoms.

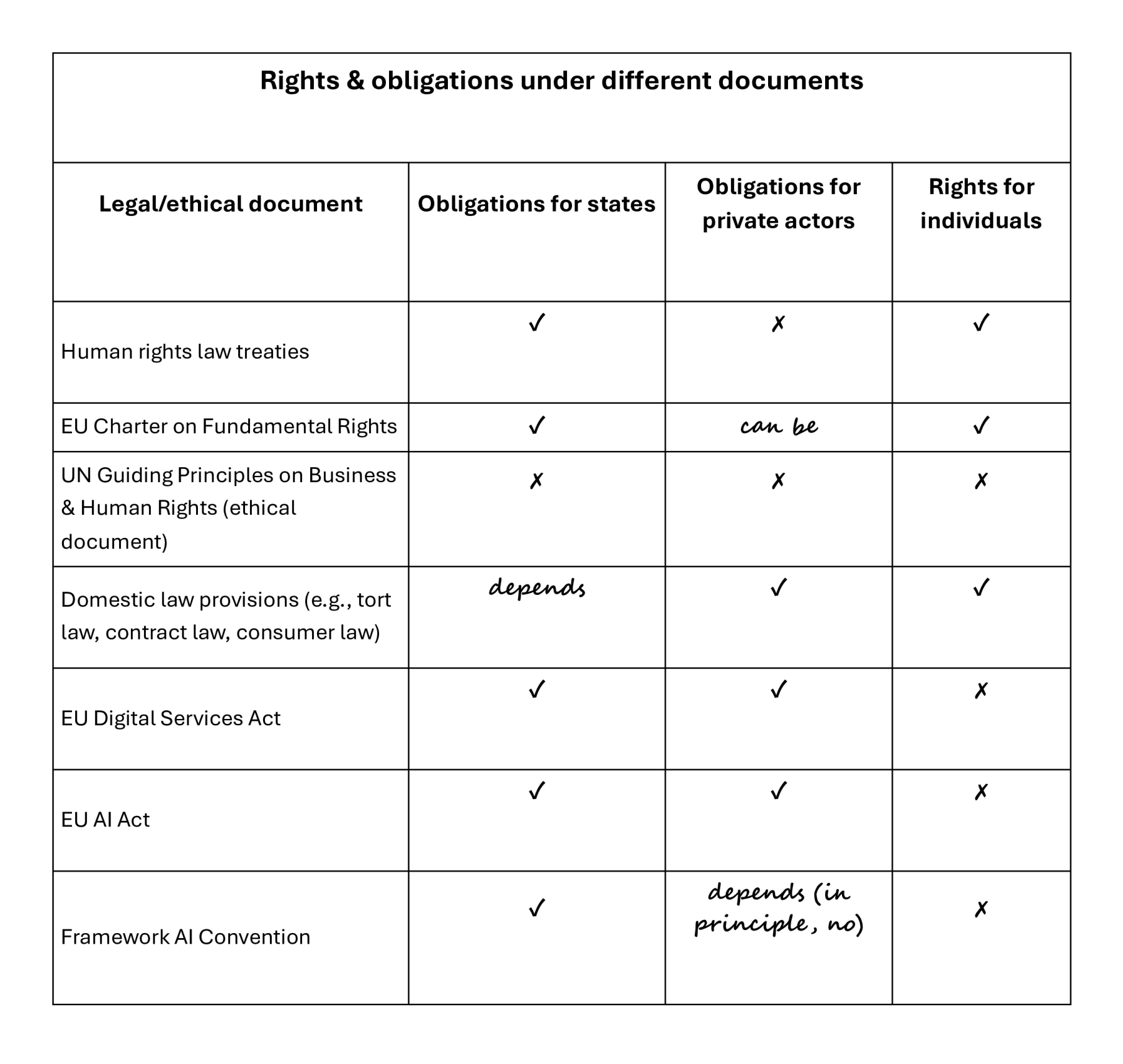

(2.2) Sources of International Human Rights Law

The sources of human rights law answer the question ‘where do we go to “find” human rights law?’. Since international human rights law is a branch of international law, the two share the same sources. The three main sources are: a) international treaties (also known as conventions, covenants or agreements); b) customary international law; and c) general principles of law. Today, international treaties attract most of our attention because they have developed human rights extensively and in detail. There are two more sources (known as subsidiary sources) which do not create law but help us interpret and apply the law: judgments by national and international courts and influential scholarly work. Finally, other authorities may not be law-creating or legally binding, but they contribute to the development of human rights (e.g., resolutions by the UN General Assembly or by the Parliamentary Assembly of the Council of Europe, and General Comments by UN human rights treaty bodies).

(2.2.1) Article 38(1) of the International Court of Justice Statute

According to Article 38(1) of the International Court of Justice Statute, which States accept as reflecting the sources of international law:

The Court, whose function is to decide in accordance with international law such disputes as are submitted to it, shall apply:

a. international conventions, whether general or particular, establishing rules expressly recognized by the contesting states;

b. international custom, as evidence of a general practice accepted as law;

c. the general principles of law recognized by civilized nations;

d. subject to the provisions of Article 59, judicial decisions and the teachings of the most highly qualified publicists of the various nations, as subsidiary means for the determination of rules of law.

(2.3) Techniques for the Progressive Development of Human Rights Law

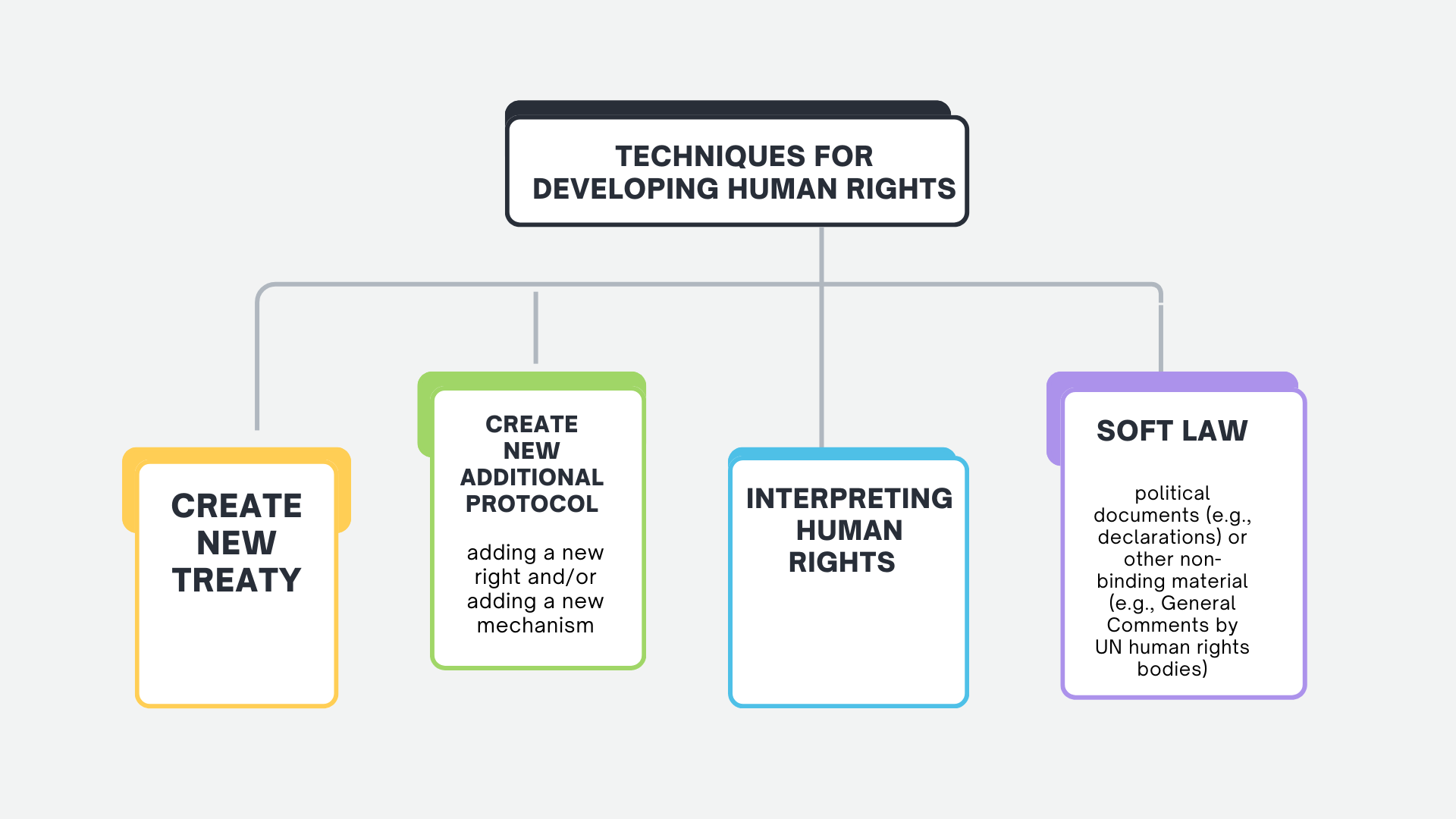

Human rights law progresses in many ways. Taking the 1948 UDHR as a critical milestone, the development of human rights over almost 80 years has been extraordinary. The obvious question is how human rights law can continue to evolve even though many human rights treaties date from the 1960s and 1970s. The techniques and tools that are available to develop human rights may be summarised as follows.

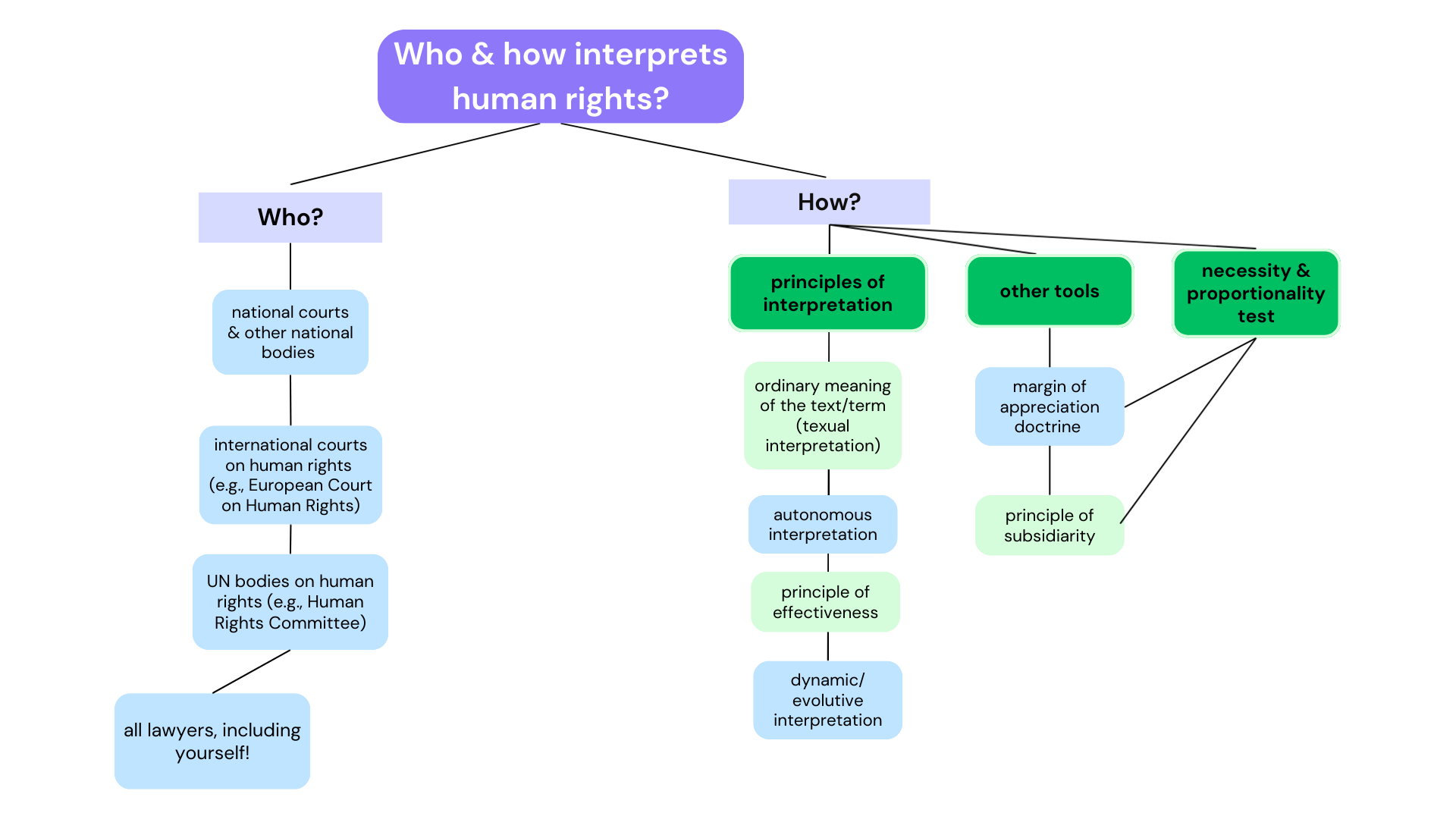

(2.3.1) Principles of interpretation in human rights law

The European Court of Human Rights (and other international human rights courts and bodies) applies a series of principles of interpretation which are not found in the text of human rights treaties.

(2.3.2) Developing existing human rights law to apply in the digital sphere and AI systems

(2.3.2.1) Human rights law applies offline and online

UN Human Rights Council, Res 20/8, The promotion, protection and enjoyment of human rights on the Internet, 16 July 2012

1. Affirms that the same rights that people have offline must also be protected online.

(2.3.2.2) Human rights must be respected throughout the life cycle of AI systems

UNGA Res 78/265, Seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development, UN Doc A/RES/78/265, 1 April 2024

5. Emphasizes that human rights and fundamental freedoms must be respected, protected and promoted throughout the life cycle of artificial intelligence systems, calls upon all Member States and, where applicable, other stakeholders to refrain from or cease the use of artificial intelligence systems that are impossible to operate in compliance with international human rights law or that pose undue risks to the enjoyment of human rights, especially of those who are in vulnerable situations […]

[6] […] (k) Promoting transparency, predictability, reliability and understandability throughout the life cycle of artificial intelligence systems that make or support decisions impacting end-users, including providing notice and explanation, and promoting human oversight, such as, for example, through review of automated decisions and related processes or, where appropriate and relevant, human decision-making alternatives or effective redress and accountability for those adversely impacted by automated decisions of artificial intelligence systems;

(l) Strengthening investment in developing and implementing effective safeguards, including risk and impact assessments, throughout the life cycle of artificial intelligence systems to protect the exercise of and mitigate against the potential impact on the full and effective enjoyment of human rights and fundamental freedoms;

[…]

(2.3.2.3) General Comments by UN human rights treaty bodies developing the scope of existing rights

General Comments are not legally binding. However, they are an authoritative tool for promoting clarity on, and expanding the scope of, UN human rights treaties.

Committee on the Rights of the Child, General Comment No 25 (2021) on children’s rights in relation to the digital environment, 2 March 2021

7. In the present general comment, the Committee explains how States parties should implement the Convention in relation to the digital environment and provides guidance on relevant legislative, policy and other measures to ensure full compliance with their obligations under the Convention and the Optional Protocols thereto in the light of the opportunities, risks and challenges in promoting, respecting, protecting and fulfilling all children’s rights in the digital environment.

[…]

42. States parties should prohibit by law the profiling or targeting of children of any age for commercial purposes on the basis of a digital record of their actual or inferred characteristics, including group or collective data, targeting by association or affinity profiling. Practices that rely on neuromarketing, emotional analytics, immersive advertising and advertising in virtual and augmented reality environments to promote products, applications and services should also be prohibited from engagement directly or indirectly with children.

(2.3.2.4) New treaties on human rights and AI

Council of Europe, Framework Convention on AI, Human Rights, Democracy and the Rule of Law, CETS No 225 (opened for signature 5 September 2024)

A noteworthy development is the recent conclusion of the Council of Europe Framework Convention on AI, Human Rights, Democracy and the Rule of Law (AI Convention). It is the first international treaty regulating AI, and its main aim is to ensure that activities within the lifecycle of AI systems are fully consistent with human rights, democracy and the rule of law. The AI Convention does not create new human rights; rather, it reinforces the role of existing human rights law in addressing the challenges posed by AI. The choice of a legally binding international instrument came, however, with a trade-off. As a framework convention, it establishes broad commitments for States parties and leaves more detailed regulation to subsequent agreements, non-binding instruments or national legislation.

Article 1 – Object and purpose

1 The provisions of this Convention aim to ensure that activities within the lifecycle of artificial intelligence systems are fully consistent with human rights, democracy and the rule of law.

2 Each Party shall adopt or maintain appropriate legislative, administrative or other measures to give effect to the provisions set out in this Convention. These measures shall be graduated and differentiated as may be necessary in view of the severity and probability of the occurrence of adverse impacts on human rights, democracy and the rule of law throughout the lifecycle of artificial intelligence systems. This may include specific or horizontal measures that apply irrespective of the type of technology used.

3 In order to ensure effective implementation of its provisions by the Parties, this Convention establishes a follow-up mechanism and provides for international co-operation.

Article 4 – Protection of human rights

Each Party shall adopt or maintain measures to ensure that the activities within the lifecycle of artificial intelligence systems are consistent with obligations to protect human rights, as enshrined in applicable international law and in its domestic law.

Article 5 – Integrity of democratic processes and respect for the rule of law

1 Each Party shall adopt or maintain measures that seek to ensure that artificial intelligence systems are not used to undermine the integrity, independence and effectiveness of democratic institutions and processes, including the principle of the separation of powers, respect for judicial independence and access to justice.

2 Each Party shall adopt or maintain measures that seek to protect its democratic processes in the context of activities within the lifecycle of artificial intelligence systems, including individuals’ fair access to and participation in public debate, as well as their ability to freely form opinions.

(2.3.2.5) Criticism of the AI Convention for not including new rights

Explanatory memorandum by Ms Thórhildur Sunna Ævarsdóttir, Rapporteur, Committee on Legal Affairs and Human Rights, Report, Draft Framework Convention on Artificial Intelligence, Human Rights, Democracy and the Rule of Law, Doc. 15971, 16 April 2024

38. […] I believe that the drafters have missed an opportunity to include new rights, rights that could flow from already existing rights (human dignity, personal autonomy, right to respect for private life) but adapted or specifically tailored to an AI context. For instance, when examining the brain-computer interface technology, the Assembly called on member States to consider establishing new “neurorights”, such as cognitive liberty, mental privacy and mental integrity.

(2.3.2.6) Ethical-based frameworks and the human rights-based approach to AI

A Rachovitsa, ‘AI and Human Rights’, in C Kerrigan (ed), Artificial Intelligence – Law and Regulation (2nd ed, Edward Elgar, 2025)

Until recently, the relevance of human rights law to risks associated with AI did not feature as a primary concern in policy and law-making. There are more than 50 AI governance initiatives on a global and regional level, led by States, industry or multiple stakeholders most of which are non-binding. Certain notable, intergovernmental policy frameworks are the 2019 OECD Recommendation on Artificial Intelligence, the 2021 UNESCO Recommendation on the Ethics of Artificial Intelligence and the 2023 Hiroshima AI Process, which set out ethical principles for responsible stewardship of trustworthy AI.

A certain measure of convergence exists regarding these ethical principles. They typically feature transparency, explainability, accountability, safety and security. […] Some of these also refer to more human rights-like principles and concepts: the principles of fairness and non-discrimination; human-centred values; the right to privacy and data protection; and human-centricity in the design, development and deployment of advanced AI systems. These principles are undoubtedly valuable as they lay out ethical frameworks, build political consensus among States and other actors and even have a certain bearing on human rights protection. Yet, they fall short of bringing international human rights law to the foreground. […] The above policy initiatives reference ethical, non-binding principles and do not explicitly refer to human rights law per se which consists of the rights of individuals and respective obligations of States as well as remedies and redress.

Questions

- Do we need new digital human rights tailored to digital circumstances and/or AI systems? Can you think of specific examples of novel human rights?

- Evaluate advantages and disadvantages of concluding a new treaty on human rights and new technologies/AI (e.g., the AI Convention) vis-à-vis progressively developing human rights law in other ways.

- Why does the AI Convention purport to protect human rights as well as democracy and the rule of law? Can you think of examples of algorithmic harms that may not be captured by “human rights law” but may be covered by “democracy” or “rule of law”?

(2.4) Restricting Human Rights

Most human rights are not absolute. States may interfere with the exercise of a given human right and restrict it, subject to the conditions set out in human rights treaties.

To take an example, the right to respect for private and family life is protected in the ECHR by Article 8:

1. Everyone has the right to respect for his private and family life, his home and his correspondence.

2. There shall be no interference by a public authority with the exercise of this right except such as is in accordance with the law and is necessary in a democratic society in the interests of national security, public safety or the economic well-being of the country, for the prevention of disorder or crime, for the protection of health or morals, or for the protection of the rights and freedoms of others.

Paragraph 1 of the provision formulates the rule, the protective scope of the right. Paragraph 2 provides the exception to the rule, namely the requirements for an interference with the exercise of this right to be lawful:

a) it needs to be ‘in accordance with the law’

b) it needs to serve one of the specifically mentioned legitimate aims, namely ‘in the interests of national security, public safety or the economic well-being of the country, for the prevention of disorder or crime, for the protection of health or morals, or for the protection of the rights and freedoms of others’

c) it needs to be ‘necessary in a democratic society’.

Therefore, a restriction to a given right may be imposed and is lawful under human rights law as long as the restriction is provided by law, serves one of the legitimate aims exhaustively enumerated in the respective provision, and is necessary and proportionate to the aim pursued. In most cases, the third requirement is decisive.

Exceptionally, certain human rights are not subject to restrictions. Examples are the right to be free from torture, inhuman or degrading treatment or punishment and the right to freedom of thought.

(2.5) Different protection of human rights under different treaties, instruments and regimes

The protection of human rights differs across treaties. One reason is that treaties differ in their material scope, since they include different rights in their text. Moreover, two treaties or instruments may contain the same right but formulate its protective scope, or the requirements for restricting it, in different ways. It is also significant how courts and other bodies interpret and apply the treaties that they supervise. For example, the UN Human Rights Committee interprets ‘everyone’ under the ICCPR to include only natural persons, whereas the ECtHR interprets ‘everyone’ under the ECHR to include both natural persons and legal entities. One needs to be aware of how a given human right may enjoy different levels of protection across different treaties and regimes, especially when assessing the necessity and proportionality of a restriction and the factors that are weighed therein.

Article 51 EU Charter – Field of application

1. The provisions of this Charter are addressed to the institutions, bodies, offices and agencies of the Union with due regard for the principle of subsidiarity and to the Member States only when they are implementing Union law. They shall therefore respect the rights, observe the principles and promote the application thereof in accordance with their respective powers and respecting the limits of the powers of the Union as conferred on it in the Treaties.

2. The Charter does not extend the field of application of Union law beyond the powers of the Union or establish any new power or task for the Union, or modify powers and tasks as defined in the Treaties.

Article 52 EU Charter – Scope and interpretation of rights and principles

1. Any limitation on the exercise of the rights and freedoms recognised by this Charter must be provided for by law and respect the essence of those rights and freedoms. Subject to the principle of proportionality, limitations may be made only if they are necessary and genuinely meet objectives of general interest recognised by the Union or the need to protect the rights and freedoms of others.

2. Rights recognised by this Charter for which provision is made in the Treaties shall be exercised under the conditions and within the limits defined by those Treaties. […]

Article 53 EU Charter – Level of protection

Nothing in this Charter shall be interpreted as restricting or adversely affecting human rights and fundamental freedoms as recognised, in their respective fields of application, by Union law and international law and by international agreements to which the Union or all the Member States are party, including the European Convention for the Protection of Human Rights and Fundamental Freedoms, and by the Member States’ constitutions.

(2.6) Scope of Human Rights Obligations

This section clarifies two aspects of the scope of human rights obligations, namely the personal scope and territorial scope.

(2.6.1) Personal scope of human rights obligations

The personal scope of human rights obligations answers two questions:

- Who has the right to be protected?

- Who bears the responsibility to protect human rights?

(2.6.1.1) States are bound to protect the human rights of individuals

The answer to the question of who has the right to be protected in human rights law is: all individuals. The answer to the question of who bears the responsibility to protect human rights is that States and state authorities are responsible for protecting human rights. This means that States are not, in principle, responsible for protecting individuals from private actors. Human rights law essentially sets up and regulates a specific relationship between individuals and the State.

Article 2(1) International Covenant on Civil and Political Rights

Each State Party to the present Covenant undertakes to respect and to ensure to all individuals within its territory and subject to its jurisdiction the rights recognized in the present Covenant, without distinction of any kind, such as race, colour, sex, language, religion, political or other opinion, national or social origin, property, birth or other status.

Article 1 European Convention on Human Rights

The High Contracting Parties shall secure to everyone within their jurisdiction the rights and freedoms defined in Section I of this Convention.

(2.6.1.2) Holding States responsible for acts/omissions of non-state actors – the due diligence standard

Although human rights obligations bind only States, in certain circumstances States may be required to take measures to protect human rights also from the acts or omissions of private actors. Human rights bodies and courts have developed their own approaches as to when States have such a due diligence obligation.

(2.6.1.2.1) The UN Human Rights Committee regarding State parties’ obligations to exercise due diligence

UN Human Rights Committee, General Comment No 31 on the nature of the general legal obligation imposed on States Parties to the Covenant: International Covenant on Civil and Political Rights (2004)

8. The article 2, paragraph 1, obligations are binding on States and do not, as such, have direct horizontal effect as a matter of international law. The Covenant cannot be viewed as a substitute for domestic criminal or civil law. However the positive obligations on States Parties to ensure Covenant rights will only be fully discharged if individuals are protected by the State, not just against violations of Covenant rights by its agents, but also against acts committed by private persons or entities that would impair the enjoyment of Covenant rights in so far as they are amenable to application between private persons or entities. There may be circumstances in which a failure to ensure Covenant rights as required by article 2 would give rise to violations by States Parties of those rights, as a result of States Parties’ permitting or failing to take appropriate measures or to exercise due diligence to prevent, punish, investigate or redress the harm caused by such acts by private persons or entities.

(2.6.1.2.2) The European Court of Human Rights develops the “Osman test” on due diligence

ECtHR, Osman v United Kingdom, App no 23452/94, 28 October 1998 (Grand Chamber)

115. The Court notes that the first sentence of Article 2 § 1 enjoins the State not only to refrain from the intentional and unlawful taking of life, but also to take appropriate steps to safeguard the lives of those within its jurisdiction […]. It is common ground that the State’s obligation in this respect extends beyond its primary duty to secure the right to life by putting in place effective criminal-law provisions to deter the commission of offences against the person backed up by law-enforcement machinery for the prevention, suppression and sanctioning of breaches of such provisions. It is thus accepted by those appearing before the Court that Article 2 of the Convention may also imply in certain well-defined circumstances a positive obligation on the authorities to take preventive operational measures to protect an individual whose life is at risk from the criminal acts of another individual. […]

116. For the Court and bearing in mind the difficulties involved in policing modern societies, the unpredictability of human conduct and the operational choices which must be made in terms of priorities and resources, such an obligation must be interpreted in a way which does not impose an impossible or disproportionate burden on the authorities.

[…]

In the opinion of the Court where there is an allegation that the authorities have violated their positive obligation to protect the right to life in the context of their above-mentioned duty to prevent and suppress offences against the person […], it must be established to its satisfaction that the authorities knew or ought to have known at the time of the existence of a real and immediate risk to the life of an identified individual or individuals from the criminal acts of a third party and that they failed to take measures within the scope of their powers which, judged reasonably, might have been expected to avoid that risk. […]

(2.6.1.2.3) The Court of Justice of the Economic Community of West African States fleshes out the due diligence standard

According to the Court, States’ obligation to take measures includes the obligation to introduce legislation, create bodies and procedures to implement legislation and take concrete measures for preventing harm or ensuring accountability, if such harm has occurred.

Court of Justice of the Economic Community of West African States (ECOWAS), SERAP v Federal Republic of Nigeria, Judgment no ECW/CCJ/JUD/18/12, 14 December 2012

[…]

95. From the submissions of both Parties, it has emerged that the Niger Delta is endowed with arable land and water which the communities use for their social and economic needs; several multinational and Nigerian companies have carried along oil prospection as well as oil exploitation which caused and continue to cause damage to the quality and productivity of the soil and water; the oil spillage, which is the result of various factors including pipeline corrosion, vandalisation, bunkering, etc. appears for both sides as the major source and cause of ecological pollution in the region. It is a key point that the Federal Republic of Nigeria has admitted that there has been in Niger Delta occurrences of oil spillage with devastating impact on the environment and the livelihood of the population throughout the time.

[…]

98. As such, the heart of the dispute is to determine whether in the circumstances referred to, the attitude of the Federal Republic of Nigeria, as a party to the African Charter on Human and Peoples’ Rights, is in conformity with the obligations subscribed to in the terms of Article 24 of the said instrument, which provides: “All peoples shall have the right to a general satisfactory environment favourable to their development”.

99. The scope of such a provision must be looked for in relation to Article 1 of the Charter, which provides: “The Member States of the Organization of African Unity parties to the present Charter shall recognise the rights, duties and freedoms enshrined in this Charter and shall undertake to adopt legislative or other measures to give effect to them.”

[…]

101. Article 24 of the Charter thus requires every State to take every measure to maintain the quality of the environment understood as an integrated whole, such that the state of the environment may satisfy the human beings who live there, and enhance their sustainable development.

[…]

103. Among these measures, the Court takes note of the numerous laws passed to regulate the extractive oil and gas industry and safeguard their effects on the environment, the creation of agencies to ensure the implementation of the legislation, and the allocation to the region, 13% of resources produced there, to be used for its development.

[…]

105. This means that the adoption of the legislation, no matter how advanced it may be, or the creation of agencies inspired by the world’s best models, as well as the allocation of financial resources in equitable amounts, may still fall short of compliance with international obligations in matters of environmental protection if these measures just remain on paper and are not accompanied by additional and concrete measures aimed at preventing the occurrence of damage or ensuring accountability, with the effective reparation of the environmental damage suffered.

[…]

111. And it is precisely this omission to act, to prevent damage to the environment and to make accountable the offenders, who feel free to carry on their harmful activities, with clear expectation of impunity, that characterises the violation by the Federal Republic of Nigeria of its international obligations under Articles 1 and 24 of the African Charter on Human and Peoples’ Rights.

[…]

(2.6.2) Territorial scope of human rights obligations

The territorial scope of human rights obligations clarifies where human rights need to be protected. It seems straightforward that a human rights treaty applies within the territory of a State party. But what about situations outside the territory of a State party? Are there scenarios in which a State may be responsible for protecting human rights outside its territory?

(2.6.2.1) Article 2(1) International Covenant on Civil and Political Rights

Each State Party to the present Covenant undertakes to respect and to ensure to all individuals within its territory and subject to its jurisdiction the rights recognized in the present Covenant.

(2.6.2.2) UN Human Rights Committee, General Comment No 31 (2004)

10. States Parties are required by article 2, paragraph 1, to respect and to ensure the Covenant rights to all persons who may be within their territory and to all persons subject to their jurisdiction. This means that a State party must respect and ensure the rights laid down in the Covenant to anyone within the power or effective control of that State Party, even if not situated within the territory of the State Party.

(2.6.2.3) UN Human Rights Council, Res 42/15, The Right to Privacy in the Digital Age, 26 September 2019

Emphasizing that unlawful or arbitrary surveillance and/or interception of communications, the unlawful or arbitrary collection of personal data or unlawful or arbitrary hacking and the unlawful or arbitrary use of biometric technologies, as highly intrusive acts, violate or abuse the right to privacy, can interfere with other human rights, including the right to freedom of expression and to hold opinions without interference, and the right to freedom of peaceful assembly and association, and may contradict the tenets of a democratic society, including when undertaken extraterritorially or on a mass scale

(2.6.2.4) Article 1 European Convention on Human Rights

The High Contracting Parties shall secure to everyone within their jurisdiction the rights and freedoms defined in Section I of this Convention.

(2.6.2.5) The ECtHR’s first opportunity to consider and decide whether an interference with an applicant’s electronic communications falls within the jurisdiction of a State

ECtHR, Wieder and Guarnieri v United Kingdom, App nos 64371/16 and 64407/16, 12 September 2023

1. The principal issue to be addressed in the present case is whether, for the purposes of a complaint under Article 8 of the Convention, persons outside a Contracting State fall within its territorial jurisdiction if their electronic communications were (or were at risk of being) intercepted, searched and examined by that State’s intelligence agencies operating within its borders.

[…]

6. The first applicant is an IT professional and independent researcher. He has worked for commercial data centres and news organisations.

7. The second applicant is a privacy and security researcher and the creator of an open source malware analysis system. He has researched and published extensively on privacy and surveillance with Der Spiegel and The Intercept.

[…]

12. The applicants in the present case lodged applications with the [Investigatory Powers Tribunal (IPT)] with the aid of a standard application form made available on Privacy International’s website. They alleged that the respondent Government and/or the security services had breached Articles 8 and 10 of the Convention because they had and/or continued to intercept, solicit, obtain, process, use, store and/or retain their information and/or communications; and because their information and/or communications were accessible to the respondent Government as part of datasets maintained wholly or in part by other Governments’ intelligence agencies; and that the Government and/or security services might have acted unlawfully under domestic law by intercepting, soliciting, accessing, obtaining, processing, storing or retaining their information and/or communications in breach of their own internal policies and procedures.

[…]

74. The Government asserted that the interception of communications by a Contracting State did not fall within that State’s jurisdictional competence for the purposes of Article 1 of the Convention when the sender or recipient complaining of a breach of their Article 8 rights was outside the territory of the Contracting State.

75. The Government argued that a State’s jurisdiction within the meaning of Article 1 of the Convention was primarily territorial. Any other basis of jurisdiction was exceptional and required special justification in the particular circumstances […]. In Al-Skeini and Others v. the United Kingdom [GC], no. 55721/07, §§ 133-42, ECHR 2011 the Grand Chamber had set out three exceptions to the territorial basis of jurisdiction: State agent authority and control; effective control over an area; and the Convention legal space (“espace juridique”). […]

76. For the Government, the interception of communications and related communications data would not involve the exercise of authority and control over the individual whose privacy was interfered with. Given that intercepted communications nevertheless continued on to the recipient, GCHQ could not be said to have exercised full authority and control over those communications, much less over the sender or recipient.

77. The Government further argued that [since] the applicants had not been physically present in the United Kingdom at any relevant point, any interference with their privacy or freedom of expression must have taken place outside the United Kingdom. In this regard, the Government disputed that the interference with the applicants’ rights under Article 8 of the Convention was the interception, extraction, filtering, storage, analysis and dissemination of intercepted content and related communications data. For the Government, a person’s private life was a matter of personal autonomy. Interferences with, and effects upon, his private life were therefore not abstract concepts which could be separated from the individual, but rather events which happened to the individual. That was so even if the originating cause of the impact or interference took place in a different State. The interference happened to the individual, and thus took place where the individual was located. […]

79. For the Government, there was nothing absurd about individuals outside the United Kingdom falling outside that State’s territorial jurisdiction. On the contrary, it was simply a natural consequence of the territorial nature of jurisdiction. The very fact that the Convention was not universal meant that jurisdictional lines had to be drawn, and some individuals would fall outside those lines. Such an outcome would not lead to the inevitable conclusion that controls over extraterritorial acts were lacking.

[…]

81. The applicants argued that their communications and/or related communications data fell within the United Kingdom’s jurisdiction for the purposes of Article 1 of the Convention. In their opinion, where interception, storage, processing and interrogation of communications was carried out by the Contracting State on its own territory, it fell within its jurisdictional competence for two reasons.

82. First, where a Contracting State intercepted communications and/or related communications data within its own borders, the resulting interference with Convention rights was within that State’s jurisdiction, even if the victim was abroad at the moment of interference. For the applicants, this was consistent with the Court’s approach to jurisdiction in respect of other Convention rights, including Article 1 of Protocol No. 1 […], Article 6 […], Article 13 […] and Article 5 […]. They argued that the same approach should apply under Article 8 of the Convention; as with the interference with property, when “correspondence” was intercepted, opened, and read by a Contracting State, the interference took place within the jurisdiction of that State. Any other outcome would render Convention rights illusory in practice.

83. Secondly, the applicants contended that the activity fell within the scope of one of the recognised exceptions to territoriality. When a State carried on secret surveillance in its territory it exercised authority and control over the victim whose communications were intercepted. In the United Kingdom, surveillance was carried out with legal authority and the intelligence agencies assumed full control over intercepted communications. There was no principled basis for holding that “State agent authority and control” required physical control and power over individuals abroad.

84. Finally, the applicants submitted that the consequences would be absurd if they were not within the United Kingdom’s jurisdiction for the purposes of Article 1 of the Convention merely because they were not present within its territory at the moment when interception occurred. It would mean that Contracting States could conduct mass surveillance of everyone outside their territory, including their own citizens and citizens of all other Council of Europe Contracting States, and share intelligence obtained in respect of those individuals, without complying with any of the safeguards required by Article 8 of the Convention. It would also mean that if the communications of a person habitually resident in the United Kingdom were intercepted while he was temporarily out of the country, and analysed after his return, the State would have jurisdiction in respect of the analysis but not in respect of the original interception. There was no rational basis for this distinction, which made little sense in view of the fact that the proliferation of online communications had deprived national borders of their meaning.

[…]

(β) Application of the general principles to the facts of the present case

88. To date, the Court has not had the opportunity to consider the question of jurisdiction in the context of a complaint concerning an interference with an applicant’s electronic communications. In Bosak and Others v. Croatia (nos. 40429/14 and 3 others, 6 June 2019) the Court did not consider whether the interception of the communications of the two applicants who were living in the Netherlands fell within Croatia’s jurisdiction for the purposes of Article 1 of the Convention, perhaps because those applicants’ telephone conversations were intercepted and recorded by the Croatian authorities on the basis of secret surveillance orders lawfully issued against another applicant, who lived in Croatia and with whom they had been in contact. While the question of jurisdiction was alluded to in Weber and Saravia v. Germany (dec.), no. 54934/00, § 72, ECHR 2006-XI and in Big Brother Watch and Others […], in neither case was it necessary to decide the issue.

89. The applicants in the present case have not suggested that they were themselves at any relevant time in the United Kingdom or in an area over which the United Kingdom exercised effective control. Rather, they contend either that the acts complained of – being the interception, extraction, filtering, storage, analysis and dissemination of their communications by the United Kingdom intelligence agencies pursuant to the section 8(4) [of the Regulation of Investigatory Powers Act 2000] regime […] – nevertheless fell within the respondent Government’s territorial jurisdiction, or, in the alternative, that one of the exceptions to the principle of territoriality applied.

90. In Big Brother Watch and Others the Court identified four stages to the bulk interception process: the interception and initial retention of communications and related communications data; the searching of the retained communications and related communications data through the application of specific selectors; the examination of selected communications/related communications data by analysts; and the subsequent retention of data and use of the “final product”, including the sharing of data with third parties […]. Although it did not consider that the interception and initial retention constituted a particularly significant interference, in its view the degree of interference with individuals’ Article 8 rights increased as the bulk interception process progressed […]. The principal interference with the Article 8 rights of the sender or recipient was therefore the searching, examination and use of the intercepted communications.

91. In the context of the section 8(4) regime each of the steps which constituted an interference with the privacy of electronic communications, being the interception and, more particularly, the searching, examining and subsequent use of those intercepted communications, were carried out by the United Kingdom intelligence agencies acting – to the best of the Court’s knowledge – within United Kingdom territory.

92. It is the Government’s contention that any interference with the applicants’ private lives occasioned by the interception, storage, searching and examination of their electronic communications could not be separated from their person and would therefore have produced effects only where they themselves were located – that is, outside the territory of the United Kingdom.

[…]

94. Turning to the facts of the case at hand, the interception of communications and the subsequent searching, examination and use of those communications interferes both with the privacy of the sender and/or recipient, and with the privacy of the communications themselves. Under the section 8(4) regime the interference with the privacy of communications clearly takes place where those communications are intercepted, searched, examined and used and the resulting injury to the privacy rights of the sender and/or recipient will also take place there.

95. Accordingly, the Court considers that the interference with the applicants’ rights under Article 8 of the Convention took place within the United Kingdom and therefore fell within the territorial jurisdiction of the respondent State. As such, it is not necessary to consider whether any of the exceptions to the territoriality principle are applicable.

(2.7) Are Private/Business Actors Responsible for Human Rights Violations?

Private actors, including business actors, can play a significant role in ensuring the effective exercise of human rights. Most of the technological infrastructure is designed, developed and deployed privately, via a web of contracts and without public law guarantees. Despite corporations’ paramount role, their activities are only weakly grounded in (human rights) law. Private actors and corporations are not bound by human rights treaty obligations. Instead, corporations are expected to abide by non-binding frameworks concerning the mitigation of the human rights impacts of their activities, such as the UN Guiding Principles on Business and Human Rights. Yet business corporations are still bound by the domestic law of the State within whose jurisdiction they operate and provide their products/services. This means that human rights-related issues may come into play before domestic courts and that corporations may have obligations under domestic legislation (or EU law).

(2.7.1) The UN Guiding Principles on Business and Human Rights

You may read the UN Guiding Principles on Business and Human Rights.

(2.7.1.1) Report of the Special Rapporteur on the promotion and protection of the right to freedom of opinion and expression, Freedom of expression, states and the private sector in the digital age, 11 May 2016

9. Human rights law does not as a general matter directly govern the activities or responsibilities of private business. A variety of initiatives provide guidance to enterprises to ensure compliance with fundamental rights. The Human Rights Council endorsed the Guiding Principles on Business and Human Rights: Implementing the United Nations “Protect, Respect and Remedy” Framework (see A/HRC/17/4 and A/HRC/17/31). Reflecting existing human rights law, the Guiding Principles reaffirm that States must ensure that not only State organs but also businesses under their jurisdiction respect human rights.

10. The Guiding Principles provide a framework for the consideration of private business responsibilities in the information and communications technology sector worldwide, independent of State obligations or implementation of those obligations. For instance, the Guiding Principles assert a global responsibility for businesses to avoid causing or contributing to adverse human rights impacts through their own activities, and to address such impacts when they occur and seek to prevent or mitigate adverse human rights impacts that are directly linked to their operations, products or services by their business relationships, even if they have not contributed to those impacts.

11. Due diligence, according to the Guiding Principles, enables businesses to identify, prevent, mitigate and account for how they address their adverse human rights impacts […] In the digital environment, human rights impacts may arise in internal decisions on how to respond to government requests to restrict content or access customer information, the adoption of terms of service, design and engineering choices that implicate security and privacy, and decisions to provide or terminate services in a particular market.

12. As a matter of transparency, the Guiding Principles state that businesses should be prepared to communicate how they address their human rights impacts externally, particularly when concerns are raised by or on behalf of affected stakeholders […] Meaningful disclosures shed light on, among other things, the volume and context of government requests for content removals and customer data, the processes for handling such requests, and interpretations of relevant laws, policies and regulations. Corporate transparency obligations may also include a duty to disclose processes and reporting relating to terms of service enforcement and private requests for content regulation and user data.

13. Finally, the responsibility to respect involves attention to the availability of remedies — from moral remedies to compensation and guarantees of non-repetition — when the private actor has caused or contributed to adverse impacts.

(2.7.1.2) Business and Human Rights in Technology Project

According to the Business and Human Rights in Technology Project (B-Tech Project), which tailors the UN Guiding Principles to digital technologies, due diligence should apply to the conceptualisation, design and testing phases of new products – as well as the underlying data sets and algorithms.

You can read more about the B-Tech Project here.

(2.7.2) Privatisation of the Effective Exercise of Human Rights

Due to the private nature of the products and services offered, the effective exercise of human rights is also subject to privatisation. An individual does not have a human rights claim vis-à-vis a business actor: human rights apply only in the relationship between the State and the individual. The relationship between an individual and a business actor is subject to other areas of law, such as contract or tort law. The terms of service of a product/service that we use qualify as a contract.

(2.7.2.1) Terms of Service

Report of the Special Rapporteur on the promotion and protection of the right to freedom of opinion and expression, Freedom of expression, states and the private sector in the digital age, 11 May 2016

52. Terms of service, which individuals typically must accept as a condition to access a platform, often contain restrictions on content that may be shared. These restrictions are formulated under local laws and regulations and reflect similar prohibitions, including those against harassment, hate speech, promotion of criminal activity, gratuitous violence and direct threats. Terms of service are frequently formulated in such a general way that it may be difficult to predict with reasonable certainty what kinds of content may be restricted. The inconsistent enforcement of terms of service has also attracted public scrutiny. Some have argued that the world’s most popular platforms do not adequately address the needs and interests of vulnerable groups; for example, there have been accusations of reluctance “to engage directly with technology-related violence against women, until it becomes a public relations issue”. At the same time, platforms have been criticized for overzealous censorship of a wide range of legitimate but (perhaps to some audiences) “uncomfortable” expressions. Lack of an appeals process or poor communication by the company about why content was removed or an account deactivated adds to these concerns. Terms of service that require registration linked to an individual’s real name or evidence to demonstrate valid use of a pseudonym can also disproportionately inhibit the ability of vulnerable groups or civil society actors in closed societies to use online platforms for expression, association or advocacy

[…]

Remedies and enforcement of terms of service

69. To enforce terms of service, companies may not always have sufficient processes to appeal content removal or account deactivation decisions where a user believes the action was in error or the result of abusive flagging campaigns. Further research that examines best practices in how companies communicate terms of service enforcement decisions and how they implement appeals mechanisms may be useful.

[…]

71. The appropriate role of the State in supplementing or regulating corporate remedial mechanisms also requires closer analysis. Civil proceedings and other judicial redress are often available to consumers adversely affected by corporate action, but these are often cumbersome and expensive. Meaningful alternatives may include complaint and grievance mechanisms established and run by consumer protection bodies and industry regulators. Several States also mandate internal remedial or grievance mechanisms: India, for example, requires corporations that possess, deal with or handle sensitive personal data to designate grievance officers to address “any discrepancies and grievances […] with respect to processing of information”.

For example, if you watch YouTube, your rights are conditioned by YouTube’s Terms of Service. These Terms of Service consist of a series of terms and policies:

Applicable Terms

Your use of the Service is subject to these terms, the YouTube Community Guidelines and the Policy, Safety and Copyright Policies (together, the “Agreement”). Your Agreement with us will also include the Advertising on YouTube Policies if you provide advertising or sponsorships to the Service or incorporate paid promotions in your Content.

Viewers and creators around the world use YouTube to express their ideas and opinions freely, and we believe that a broad range of perspectives ultimately makes us a stronger and more informed society, even if we disagree with some of those views. That’s why we have policies to help build a safer community.

- Community Guidelines

- Copyright

- Monetisation policies

- Legal removals

- Spam and deceptive practices (e.g., fake engagement)

- Sensitive content (e.g., child safety, nudity and sexual content, suicide and self-harm, vulgar language)

- Violent or dangerous content (e.g., harassment and cyberbullying, harmful or dangerous content, hate speech, violent criminal organisations, violent or graphic content)

- Misinformation (e.g., medical or elections misinformation)

(2.7.2.2) Creating novel private “adjudicative” bodies

A novel example of setting up external bodies to scrutinise technologies and AI systems is the creation of the META Oversight Board. The Board decides appeals brought by individuals against META’s content enforcement decisions. Because these remedies belong to the realm of private governance, it is an open question whether they qualify as adequate and effective remedies.

Oversight Board Charter, September 2019

[…]

The purpose of this charter is to establish the framework for creating such an institution: the Oversight Board. The purpose of the board is to protect free expression by making principled, independent decisions about important pieces of content and by issuing policy advisory opinions on Facebook’s content policies. The board will operate transparently and its reasoning will be explained clearly to the public, while respecting the privacy and confidentiality of the people who use Facebook, Inc.’s services, including Instagram (collectively referred to as “Facebook”). It will provide an accessible opportunity for people to request its review and be heard.

[…]

Article 2. Authority to Review

People using Facebook’s services and Facebook itself may bring forward content for board review. The board will review and decide on content in accordance with Facebook’s content policies and values.

Section 2. Basis of decision-making

Facebook has a set of values that guide its content policies and decisions. The board will review content enforcement decisions and determine whether they were consistent with Facebook’s content policies and values. For each decision, any prior board decisions will have precedential value and should be viewed as highly persuasive when the facts, applicable policies, or other factors are substantially similar.

When reviewing decisions, the board will pay particular attention to the impact of removing content in light of human rights norms protecting free expression.

[…]

Decisions by the META Oversight Board are discussed in sections (4.3.3), (5.5.2).

(2.7.3) Corporations and their activities are subject to domestic law

(2.7.3.1) Civil litigation before domestic courts to enforce terms of service – How human rights law may still be partly relevant

Business actors/corporations are bound by the domestic law of the State within whose jurisdiction they operate and provide their products/services. For instance, AI systems are deployed and used pursuant to contracts, such as the terms of service and policies of social media platforms, which users accept when signing up to use their services. In case of a breach of contract, an individual may be able to bring civil proceedings before domestic courts under contract or tort law. In certain instances, human rights law may also come into play, subject to the specific requirements of domestic law, such as the standard of reasonableness/fairness under Dutch civil law (tort).

(2.7.3.1.1) Amsterdam District Court, FvD c.s. v Google Ireland Ltd, Case number C/13/704886 / KG ZA 21-621, Judgment in summary proceedings, 15 September 2021 (civil law) (in Dutch) (unofficial translation)

The case was brought by the Forum for Democracy (FvD), a Dutch political party.

3.2. FvD(s) [Forum for Democracy] based their claims in particular on the following statements:

– the expressions in the Video do not contain any content that may lead to significant risk of serious harm and, in that respect, do not violate the Terms of Service, the Community Guidelines and/or Covid Policies;

– insofar as the expressions are contrary to the views of the WHO and the RIVM, the policy to prohibit it goes too far, because it creates an impermissible interference with the freedom of expression of FvD et al., also against the background of YouTube’s monopoly position and claimant 3’s position as a politician. FvD is effectively silenced, making it impossible to criticize controversial government policies. Thus, by removing the Video, YouTube acted in violation of standards of reasonableness and fairness and unlawfully towards FvD et al. The interests of FvD et al. in reinstating the Video outweigh YouTube’s right of ownership and the public health interests served by a strict application of the Covid policy.

3.3. Google puts forward a reasoned defence. It argues that YouTube was justified in removing the Video pursuant to the Terms of Service and the Covid Policy and further relies on its property rights and the right of freedom of business.

4 The assessment

4.1. First and foremost, YouTube and FvD et al are private parties. FvD et al. accepted the Terms of Service when creating their YouTube accounts. An agreement has been concluded between the parties, on the basis of which FvD et al. are in principle bound by the Terms of Service, the Community Guidelines and the Covid Policy.

4.2. Google rightly takes the position that the statements of claimant 3 … are at odds with various provisions … from the Covid Policy and can be regarded as a violation thereof. This applies in particular to the provisions dealing with face masks, social distancing and the comparison with influenza, as well as the provisions prohibiting claiming that vaccines do not reduce the risk of COVID-19 and claiming that ivermectin or hydroxychloroquine are effective treatments for Covid-19. Even taking into account the provocative style peculiar to claimant 3, the use of terms such as “a collective psychosis”, “a flu variation”, “utterly nonsensical distancing lines”, “experimental injections” versus “first-line drugs, such as ivermectin”, can only be seen as a violation of those provisions. Contrary to what FvD et al. argue, these expressions cannot (obviously) be seen as ‘imagery’. That claimant 3, as FvD et al. argue, “does not strictly speaking question the effectiveness of the distancing rules” can – also in light of his own statements, attitude in public and other public performances – hardly be taken seriously.

4.3. This leads to the (interim) conclusion that, for the time being, the statements of claimant 3 in the Video are in breach of the Covid Policy, and YouTube’s removal is in line with the Terms of Service and therefore not in breach of the agreement between the parties.

4.4. The foregoing does not alter the fact that there may be grounds to consider the removal of the Video unacceptable or unlawful according to the standards of reasonableness and fairness. In this context, FvD et al. have invoked their freedom of expression.

4.5. As considered in previous judgments of the judge in preliminary relief proceedings of this District Court, Article 10 of the ECHR, which establishes the right to freedom of expression, may have an effect on private law relations, for example through the interpretation of open norms of private law, such as the obligation to act in accordance with the standards of reasonableness and fairness when performing a contract. The same applies to the open norm included in Article 6:162 of the Dutch Civil Code (tort): an act or omission contrary to “what is proper in social intercourse according to unwritten law” is also regarded as a tort. The court must assess whether these standards have been met. In that assessment, the following applies as a starting point.

4.6. Everyone’s right to freedom of expression is not unlimited and does not mean that Google is unquestionably bound to tolerate the statements of FvD et al. that violate its Covid policy on its platform. By virtue of its property rights and the right to freedom of enterprise, Google may, in principle, set rules that apply to its YouTube platform, including the rule that content that violates its Covid policy will be removed. Google explained that the Covid policy aims to prevent the spread of harmful and dangerous medical misinformation on YouTube. Since Google cannot enter into medical discussions itself, it aligns itself with the scientific consensus as it is propagated by the WHO and by national organizations such as the RIVM. The Covid policy is partly based on a European Union Communication of 10 June 2020 that aimed at combating misinformation about Covid-19, as well as the European Commission’s Code of Practice of October 2018 on combating online disinformation. With Google’s Covid policy following the guidelines of such health organizations and various governments, the starting point should be that it is not acting unreasonably, but on the contrary, that it is acting in a way that is “befitting unwritten law in society”.

4.7. Furthermore, everyone’s right to freedom of expression does not imply a right to the forum of his or her choice. FvD et al. acknowledge this in itself, but have emphasized the circumstance that YouTube has a huge reach and that research shows that a substantial part of the public also uses that channel for news coverage. According to this research, this applies in particular to younger people, who are an important part of the (potential) electorate of FvD and claimant 3. According to FvD et al., YouTube is in fact indispensable for bringing the critical views as expressed in the Video to the attention of this audience. Other social media are less suitable for this because of their design (you have to be a member of Facebook) and/or their volatile nature (Twitter, Instagram), or are relatively unknown (Bitchute and Utreon).

4.8. It should be accepted that FvD et al. being able to use a channel like YouTube is of great importance in today’s society in order to convey a message. However, this does not mean that YouTube has a social ‘must-carry’ obligation for critical voices/political expressions. No such obligation is included in the (European) proposal for the Digital Services Act either. In light of the ECtHR’s Appleby judgment of May 6, 2003, the bar for intervention by the government, or the courts, in the freedom of internet platforms to moderate the content they publish is high. Only if the obstacles make “any effective exercise” impossible, or if “the essence of the right has been destroyed”, is the government (court) obliged to intervene. It is therefore only if the effective exercise of the freedom of expression is prevented, or if the essence of the right to freedom of expression is destroyed, that there is reason to intervene. That high bar is not met here.

[…]

(2.7.3.2) Corporations are subject to obligations introduced by EU law

An example of an obligation imposed on specific corporations (e.g., providers of very large online platforms and providers of very large online search engines) under EU law is:

Article 34(1), Risk Assessment, Digital Services Act Regulation

Providers of very large online platforms and of very large online search engines shall diligently identify, analyse and assess any systemic risks in the Union stemming from the design or functioning of their service and its related systems, including algorithmic systems, or from the use made of their services.

They shall carry out the risk assessments by the date of application referred to in Article 33(6), second subparagraph, and at least once every year thereafter, and in any event prior to deploying functionalities that are likely to have a critical impact on the risks identified pursuant to this Article. This risk assessment shall be specific to their services and proportionate to the systemic risks, taking into consideration their severity and probability, and shall include the following systemic risks:

(a) the dissemination of illegal content through their services;

(b) any actual or foreseeable negative effects for the exercise of fundamental rights, in particular the fundamental rights to human dignity enshrined in Article 1 of the Charter, to respect for private and family life enshrined in Article 7 of the Charter, to the protection of personal data enshrined in Article 8 of the Charter, to freedom of expression and information, including the freedom and pluralism of the media, enshrined in Article 11 of the Charter, to non-discrimination enshrined in Article 21 of the Charter, to respect for the rights of the child enshrined in Article 24 of the Charter and to a high-level of consumer protection enshrined in Article 38 of the Charter;

(c) any actual or foreseeable negative effects on civic discourse and electoral processes, and public security;

(d) any actual or foreseeable negative effects in relation to gender-based violence, the protection of public health and minors and serious negative consequences to the person’s physical and mental well-being

(2.8) Are Certain AI Systems Incompatible with the Exercise of Human Rights?

The development and/or use of certain AI systems by public and private actors alike poses novel challenges to human autonomy and dignity. In certain cases, these technologies and systems may be impossible to operate in compliance with international human rights law or may pose undue risks to the enjoyment of human rights. Scenarios concerning the prohibition of certain AI systems, and the different human rights that may be engaged, are discussed in subsequent chapters (see (3.5.3), (4.4) and chapter 7).

States have voiced some of their concerns in political documents adopted in the UN General Assembly (2.8.1) and the UN Human Rights Council (2.8.2). However, there is a distinct reluctance to reach consensus on the precise criteria according to which such systems are incompatible with the exercise of human rights. The recently concluded Council of Europe Framework Convention on AI, Human Rights, Democracy and the Rule of Law fell short of expectations in this regard (2.8.3). Article 16(6) of the Convention leaves discretion to State parties to assess the need for banning certain AI systems and does not provide clear criteria or guidance to this end. The EU AI Act is the only legally binding instrument at the moment that prohibits the development and/or use of certain AI systems (2.8.4).

Although States are presently hesitant to declare (either under domestic or international law) certain technologies and AI systems incompatible with human rights in abstracto, these systems and practices may be found, in the specific circumstances of a case, to be disproportionate or unnecessary to the aims that they purport to serve.

(2.8.1) UNGA Res 78/265, Seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development, 1 April 2024, UN Doc A/RES/78/265

Emphasizes that human rights and fundamental freedoms must be respected, protected and promoted throughout the life cycle of artificial intelligence systems, calls upon all Member States and, where applicable, other stakeholders to refrain from or cease the use of artificial intelligence systems that are impossible to operate in compliance with international human rights law or that pose undue risks to the enjoyment of human rights, especially of those who are in vulnerable situations, and reaffirms that the same rights that people have offline must also be protected online, including throughout the life cycle of artificial intelligence systems

[…]

(2.8.2) UN Human Rights Council, Res 54/21, The Right to Privacy in the Digital Age, UN Doc A/HRC/RES/54/21, 12 October 2023

3. Also recalls the increasing impact of new and emerging technologies, such as those developed in the fields of surveillance, artificial intelligence, automated decision-making and machine-learning, and of profiling, tracking and biometrics, including facial recognition, without human rights safeguards, present to the full enjoyment of the right to privacy and other human rights, and acknowledges that some applications may not be compatible with international human rights law

(2.8.3) Article 16(6) – Risk and impact management framework, Council of Europe, Framework Convention on AI, Human Rights, Democracy and the Rule of Law

Each Party shall assess the need for a moratorium or ban or other appropriate measures in respect of certain uses of artificial intelligence systems where it considers such uses incompatible with the respect for human rights, the functioning of democracy or the rule of law.

(2.8.4) Prohibited AI systems under the EU AI Act

Article 5 of the EU AI Act prohibits certain AI practices, covering the development and/or deployment of AI systems in both the public and private sectors. Such AI systems raise serious concerns due to their highly intrusive nature and broad impact on many people. Examples of such systems include aspects of:

- manipulation and exploitation of vulnerabilities (Article 5(1)(a) and (b))

- social scoring practices (Article 5(1)(c))

- predictive policing (Article 5(1)(d))

- creation or expansion of facial recognition databases through the untargeted scraping of facial images from the internet or CCTV footage (Article 5(1)(e))

- emotion recognition in the workplace and in educational institutions (Article 5(1)(f))

- biometric categorisation systems that categorise individuals based on their biometric data so as to deduce or infer sensitive characteristics (Article 5(1)(g))

- real-time remote biometric identification systems in publicly accessible spaces for law-enforcement purposes (Article 5(1)(h))

It needs to be clarified that the AI Act does not create rights for individuals. It rather imposes due diligence and transparency obligations on private and public actors when they develop and/or deploy certain AI systems.

The European Commission has published Guidelines on prohibited artificial intelligence practices established by the EU AI Act, C(2025) 884 final, 4 February 2025

GPT-4Chan is a controversial Large Language Model (LLM) that was developed and deployed by YouTuber and AI researcher Yannic Kilcher in June 2022. The LLM generates text-based output, having been trained on a dataset of millions of posts from the /pol/ board of 4chan, an anonymous online forum known for hosting hateful and extremist content. The model was trained to mimic the style and tone of /pol/ users, producing text that is nihilistic and often intentionally offensive to particular groups (racist, sexist, homophobic, etc.).

- Further reading: A Kurenkov, Lessons from the GPT-4Chan Controversy, The Gradient, 12 June 2022

Do you think that the development of GPT-4Chan (and not merely its use/deployment) could be incompatible with human rights?

- K Yeung, Study on Responsibility and AI (Council of Europe, 2019)

- A Rachovitsa, ‘AI and Human Rights’, in C Kerrigan (ed), Artificial Intelligence – Law and Regulation (Edward Elgar, 2025)

- SA Teo, ‘How Artificial Intelligence Systems Challenge the Conceptual Foundations of the Human Rights Legal Framework’ (2022) 40 Nordic Journal of Human Rights 216

- L McGregor et al, ‘International Human Rights Law as a Framework for Algorithmic Accountability’ (2019) 68 International & Comparative Law Quarterly

- B Custers, ‘New Digital Rights: Imagining Additional Fundamental Rights for the Digital Era’ (2022) 44 Computer Law & Security Review 1

- E Aizenberg, J van den Hoven, ‘Designing for Human Rights in AI’, Jul–Dec 2020 Big Data & Society 1

- A Quintavalla, J Temperman (eds), Artificial Intelligence and Human Rights (OUP 2023)
